This post explains how to check the health status of a Kubernetes cluster, step by step.

When it comes to managing my Tanzu Kubernetes cluster, keeping tabs on its health status is a vital aspect of my DevOps strategy.

The Tanzu Kubernetes Grid Service provides valuable insights into the cluster’s well-being by reporting various status conditions during the provisioning of a new Tanzu Kubernetes cluster.

In this guide, I’ll walk through the importance of monitoring the health of my Kubernetes cluster, highlighting how a proactive approach can help prevent errors before they lead to disruptions and optimize container performance based on current scalability needs.

Let’s dive in to learn about the health status of my Kubernetes cluster, ensuring smooth operations in my containerized environment.

About Cluster Health Conditions

When I delve into the details of my Tanzu Kubernetes cluster, I find a complex arrangement of moving parts, all orchestrated by independent yet interconnected controllers.

Together, they build and maintain the set of Kubernetes nodes that make up the cluster.

The key player in this ensemble is the TanzuKubernetesCluster object, which reports the health conditions of both the cluster and its individual machines.

A healthy cluster also depends on a correctly installed container runtime on each node, for example CRI-O on Ubuntu 22.04. This helps everything run smoothly and makes sure the nodes work well with Kubernetes setups.

In the sections below, I explain why understanding these cluster health conditions matters.

They provide detailed information that is instrumental in keeping a Kubernetes environment robust.

As we explore the health status of my Kubernetes cluster, it’s important to note that getting certifications, such as the Certified Kubernetes Administrator (CKA), can really enhance your skills in handling Kubernetes environments.

So, keep an eye out for special offers and discounts with the Certified Kubernetes Administrator (CKA) Coupon Code to kickstart your journey towards mastery.

How Do I Check the Health of My Kubernetes Cluster?

To ensure the well-being of my Tanzu Kubernetes cluster, I employ a straightforward yet powerful method.

By executing the command `kubectl describe cluster`, I gain a comprehensive overview of the cluster’s health status.

If the status reflects “ready,” it signifies that both the cluster infrastructure and the control plane are in optimal condition. Listing the nodes with `kubectl get nodes` should likewise show every node as Ready:

NAME STATUS ROLES AGE VERSION

node-1 Ready <none> 10d v1.21.3

node-2 Ready <none> 10d v1.21.3

node-3 Ready <none> 10d v1.21.3

However, if any of the cluster conditions are reported as “false,” it indicates that the cluster is not ready. In such cases, a message field precisely outlines the issue.

For instance, if part of the infrastructure is not ready, the node listing shows the affected node as NotReady:

NAME STATUS ROLES AGE VERSION

node-1 Ready <none> 10d v1.21.3

node-2 NotReady <none> 10d v1.21.3

node-3 Ready <none> 10d v1.21.3

In the case of an unready cluster, the next step is to diagnose the issue with the cluster infrastructure.

To do so, I execute `kubectl describe wcpcluster` to gain specific details about what might be causing the problem.

This method allows me to swiftly identify and address any issues, ensuring the seamless functionality and health of my Tanzu Kubernetes cluster.
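To spot unready nodes without reading the listing by eye, the node table shown above can be filtered with a few lines of Python. This is only a minimal sketch: the function names `not_ready_nodes` and `fetch_node_listing` are my own, and it assumes the default column layout of `kubectl get nodes`, with the node name first and the status second.

```python
import subprocess
from typing import List


def not_ready_nodes(get_nodes_output: str) -> List[str]:
    """Return names of nodes whose STATUS column is anything other than 'Ready'.

    Note: a combined status such as 'Ready,SchedulingDisabled' is also flagged.
    """
    names = []
    for line in get_nodes_output.strip().splitlines()[1:]:  # skip the header row
        columns = line.split()
        if len(columns) >= 2 and columns[1] != "Ready":
            names.append(columns[0])
    return names


def fetch_node_listing() -> str:
    """Run `kubectl get nodes`; assumes kubectl is installed and configured."""
    result = subprocess.run(
        ["kubectl", "get", "nodes"], capture_output=True, text=True, check=True
    )
    return result.stdout


# Sample taken from the listing above, so the sketch runs without a cluster.
sample = """\
NAME STATUS ROLES AGE VERSION
node-1 Ready <none> 10d v1.21.3
node-2 NotReady <none> 10d v1.21.3
node-3 Ready <none> 10d v1.21.3
"""
print(not_ready_nodes(sample))  # prints ['node-2']
```

Against a live cluster, `not_ready_nodes(fetch_node_listing())` would do the same job, and any node it returns is a candidate for `kubectl describe`.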

Mastering troubleshooting techniques is a key part of learning the CKA curriculum.

Running Health Checks with the Kubernetes Server API Endpoints

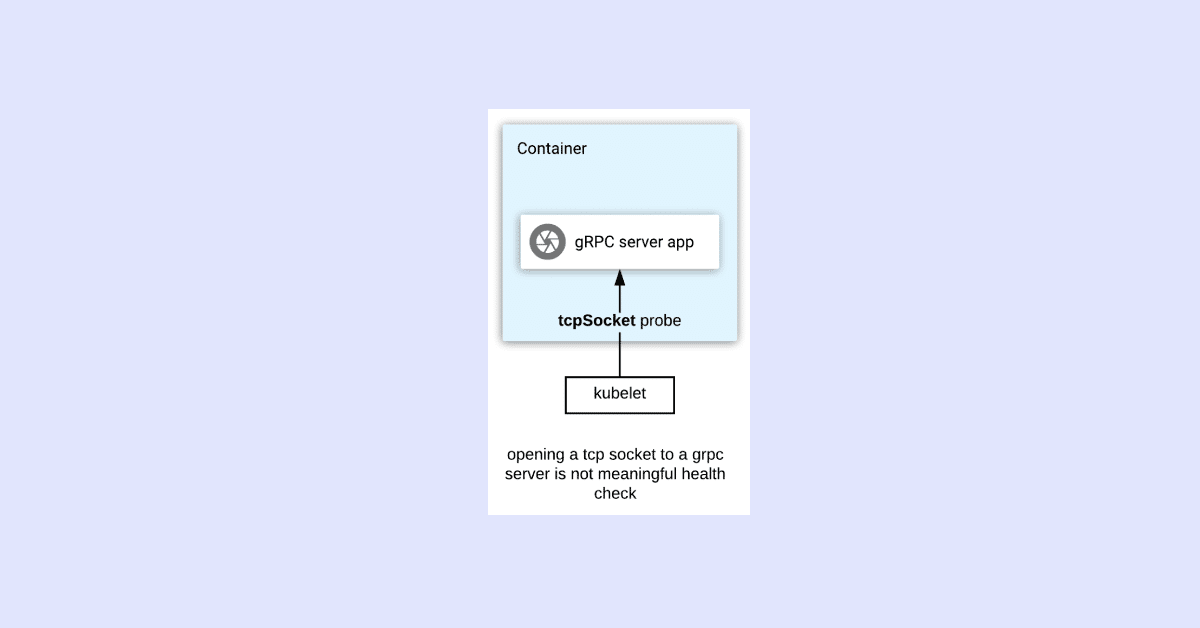

To check cluster health, the Kubernetes API server provides three endpoints:

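- Healthz: This reports whether the API server is healthy. It has been deprecated since v1.16 in favor of the more specific Livez and Readyz endpoints.

- Livez: This reports whether the API server is alive. It can be used with the `--livez-grace-period` flag to specify the startup duration.

- Readyz: This reports whether the API server is ready to accept requests.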

When checking these endpoints, the HTTP status code matters. A status code “HTTP 200” indicates the API server is healthy, live, or ready, depending on the endpoint. A failure status code such as “HTTP 500” means the check did not pass.
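The status code rule above is simple enough to capture in a small helper. The following is a sketch only: `describe_probe` is a hypothetical name, and it takes the endpoint and status code as plain arguments instead of calling the API server, so it runs without a cluster.

```python
# Wording for each endpoint, matching "healthy, live, or ready" in the text.
STATE_BY_ENDPOINT = {"healthz": "healthy", "livez": "live", "readyz": "ready"}


def describe_probe(endpoint: str, status_code: int) -> str:
    """Translate an endpoint name and HTTP status code into a one-line verdict."""
    name = endpoint.strip("/")
    if name not in STATE_BY_ENDPOINT:
        raise ValueError(f"unknown health endpoint: {endpoint!r}")
    state = STATE_BY_ENDPOINT[name]
    if status_code == 200:
        return f"API server is {state}"
    # Any other code is treated as a failed check.
    return f"API server is not {state} (HTTP {status_code})"


print(describe_probe("/readyz", 200))  # prints: API server is ready
print(describe_probe("/livez", 500))   # prints: API server is not live (HTTP 500)
```

A monitoring script would feed this with whatever status code its HTTP client returns for the endpoint.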

For manual debugging, developers can use the following command with the verbose parameter:

kubectl get --raw='/readyz?verbose'

The output provides detailed status information for the endpoint, including checks like ping, log, etc., and various post-start hooks. A “healthz check passed” message confirms the server’s health.

The output looks like the following:

[+]ping ok

[+]log ok

[+]etcd ok

[+]poststarthook/start-kube-apiserver-admission-initializer ok

[+]poststarthook/generic-apiserver-start-informers ok

[+]poststarthook/start-apiextensions-informers ok

[+]poststarthook/start-apiextensions-controllers ok

[+]poststarthook/crd-informer-synced ok

[+]poststarthook/bootstrap-controller ok

[+]poststarthook/rbac/bootstrap-roles ok

[+]poststarthook/scheduling/bootstrap-system-priority-classes ok

[+]poststarthook/start-cluster-authentication-info-controller ok

[+]poststarthook/start-kube-aggregator-informers ok

[+]poststarthook/apiservice-registration-controller ok

[+]poststarthook/apiservice-status-available-controller ok

[+]poststarthook/kube-apiserver-autoregistration ok

[+]autoregister-completion ok

[+]poststarthook/apiservice-openapi-controller ok

healthz check passed

Maintaining your Kubernetes cluster’s optimal performance is important to avoid wasting computing power and valuable resources.

It improves scalability, efficiency, and overall application performance.

By incorporating this Kubernetes global health checklist into your DevOps strategy, you can enhance your applications and ensure a smooth operation.
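Returning to the verbose endpoint output shown earlier, a long list of checks is easier to act on if only the failures are pulled out. The sketch below assumes that failing checks are prefixed with `[-]` in the same way that passing checks are prefixed with `[+]`. The failing sample is made up for illustration and `failed_checks` is a hypothetical helper name.

```python
from typing import List


def failed_checks(verbose_output: str) -> List[str]:
    """Return the names of checks marked as failed ('[-]') in verbose output."""
    failed = []
    for line in verbose_output.splitlines():
        line = line.strip()
        if line.startswith("[-]"):
            parts = line[3:].split()
            if parts:  # ignore a bare marker with no check name
                failed.append(parts[0])
    return failed


# Hypothetical output in which the etcd check does not pass.
sample_output = """\
[+]ping ok
[+]log ok
[-]etcd failed: reason withheld
[+]poststarthook/rbac/bootstrap-roles ok
"""
print(failed_checks(sample_output))  # prints ['etcd']
```

An empty list means every listed check passed, which matches the all `[+]` output above.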

Similarly, you can also learn about how to Change Nginx index.html in Kubernetes with ConfigMap.

List of Cluster Health Conditions

The following list defines the available health conditions for a Tanzu Kubernetes cluster.

- Ready: Summarizes the operational state of the main Cluster API object.

- Deleting: This means the object is in the process of being removed.

- DeletionFailed: This shows that there were issues deleting the object, and the system will try again.

- Deleted: Indicates the object has been successfully removed.

- InfrastructureReady: This gives a quick overview of how the infrastructure (the base setup) for the cluster is doing.

- WaitingForInfrastructure: Tells us the cluster is waiting for the basic setup to be ready. It is also the fallback used when the infrastructure does not report that it is ready.

- ControlPlaneReady: Informs us that the main control part of the cluster is ready.

- WaitingForControlPlane: Similar to WaitingForInfrastructure, it means the cluster is waiting for the control part to be ready. It is also the fallback used when the control part does not report that it is ready.

Understanding these conditions, alongside monitoring tools such as Prometheus, helps us know if the cluster is working well, if it’s in the process of being removed, or if there are any issues with the basic setup or control part of the Tanzu Kubernetes cluster.
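As an illustration of how these conditions fit together, the sketch below reads a list of conditions and reports which ones are holding the cluster back. It assumes the conditions arrive as JSON objects with lowercase `type` and `status` keys, as is common for Kubernetes status conditions. The sample data and the function names are hypothetical.

```python
import json
from typing import Dict, List


def is_cluster_ready(conditions: List[Dict[str, str]]) -> bool:
    """The Ready condition is treated as the overall summary."""
    return any(
        c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
    )


def blocking_conditions(conditions: List[Dict[str, str]]) -> List[str]:
    """Return every condition type, other than Ready, whose status is not True."""
    return [
        c["type"]
        for c in conditions
        if c.get("type") != "Ready" and c.get("status") != "True"
    ]


# Hypothetical conditions for a cluster whose infrastructure is still pending.
sample_conditions = json.loads("""
[
  {"type": "Ready", "status": "False"},
  {"type": "InfrastructureReady", "status": "False"},
  {"type": "ControlPlaneReady", "status": "True"}
]
""")
print(is_cluster_ready(sample_conditions))     # prints False
print(blocking_conditions(sample_conditions))  # prints ['InfrastructureReady']
```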

Additionally, learning how to create Docker images smoothly connects with managing and improving these clusters.

Condition Fields

Each condition in the health status may have several fields providing detailed information:

- Type: Describes the specific condition, such as ControlPlaneReady. When it comes to the Ready condition, it acts as a summary of all other conditions.

- Status: Indicates the state of the condition, which can be True, False, or Unknown.

- Severity: Classifies the severity level of the reason. “Info” implies that reconciliation is ongoing, “Warning” suggests something might be amiss and requires a retry, and “Error” indicates a problem requiring manual intervention to resolve.

- Reason: Explains why the status is False, either because the cluster is still waiting for readiness or because something failed. It is typically set only when the status is False.

- Message: Presents human-readable information that elaborates on the Reason field. It serves as a clear description to understand the specifics of why a particular status is False.
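The fields above lend themselves to a simple triage rule: look at Status first, then let Severity decide how urgently to react. The following sketch models a condition as a small dataclass and maps each severity to the action described in the list. The `Condition` class, the `triage` function, and the sample reason and message are illustrative assumptions, not the service’s own types.

```python
from dataclasses import dataclass


@dataclass
class Condition:
    type: str
    status: str          # "True", "False", or "Unknown"
    severity: str = ""   # "Info", "Warning", or "Error" when status is False
    reason: str = ""
    message: str = ""


# Suggested reaction for each severity level, following the field descriptions.
ACTIONS = {
    "Info": "wait, reconciliation is ongoing",
    "Warning": "watch, the operation will be retried",
    "Error": "act, manual intervention is required",
}


def triage(condition: Condition) -> str:
    """Summarize one condition and the suggested reaction in a single line."""
    if condition.status == "True":
        return f"{condition.type}: ok"
    if condition.status == "Unknown":
        return f"{condition.type}: status unknown"
    action = ACTIONS.get(condition.severity, "inspect, no severity reported")
    return f"{condition.type}: {action} ({condition.reason}: {condition.message})"


# Hypothetical condition for a control plane that is still coming up.
example = Condition(
    type="ControlPlaneReady",
    status="False",
    severity="Info",
    reason="WaitingForControlPlane",
    message="control plane is not yet initialized",
)
print(triage(example))
```

Running `triage` over every condition of a cluster gives a one-line-per-condition report that shows at a glance which items need attention.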

Conclusion: How to Check Kubernetes Cluster Health Status

Hopefully, this post has shown how to check Kubernetes cluster health status and why effective management of a Tanzu Kubernetes cluster calls for a proactive approach to health monitoring.

The Tanzu Kubernetes Grid Service and API endpoints like Healthz, Livez, and Readyz provide vital insights, and well-crafted Kubernetes YAML templates help ensure smooth deployment and management.

Commands such as `kubectl describe cluster` aid in quick health checks and issue diagnoses.

The exhaustive list of cluster health conditions, along with their fields, offers a detailed understanding of the operational state.

By integrating a Kubernetes global health checklist into the DevOps strategy, administrators ensure optimal performance, scalability, and efficiency, preventing errors and disruptions in the dynamic Tanzu Kubernetes environment.

Check out the best Kubernetes Tutorial for Beginners if you have already started learning about Kubernetes, and enroll yourself in your preferred courses using CKA 50% Discount 2024.
